January 21, 2020 | aws | by: Colin Dellow

In a perfect world, everyone would enjoy a fast Internet connection. In practice, slow residential DSL or flaky wi-fi can make your Internet connection unusable while you upload a big file. This motivated us to create s3patch, our tool for patching files on S3. At the time, we were uploading Scala-based JAR files to S3 as part of our deploy process [1] to AWS Lambda [2].

Just have a look at the difference in upload times for a 50 MB file between a fast, datacenter connection and a slow, 1.5 Mbps residential DSL connection:

$ time aws s3 cp ./test.jar s3://code402/test.jar upload: ./test.jar to s3://code402/test.jar

real 0m2.375s

$ time aws s3 cp ./test.jar s3://code402/test.jar upload: ./test.jar to s3://code402/test.jar

real 5m43.218s

It's 140x slower! We know that most of the time, a new version of the JAR file has only a few changes. Wouldn't it be great if we could just send the changes? Instead of uploading the whole file each time, we'd send that smaller set of changes to a server in the cloud, which would reconstitute the new file and upload it to S3.

Stand On The Shoulders Of Giants

"Patch" tools that can extract small changes exist for text documents. In fact, they form the basis of the modern programmer's source control workflow. It turns out similar tools also exist for binary files! We explored two tools in particular: bsdiff, by Colin Percival, and xdelta3, by Josh MacDonald. We put them through their paces by creating patches for the 190 most popular Java frameworks on the Maven Repository.

Ideally, we'd like to see a tool whose dots cluster in the lower left corner. The lower the dot, the smaller the patch. The more leftward the dot, the faster it was to generate the patch. Unfortunately, we can see immediately that bsdiff isn't a good candidate. Many of its patches took more than 10 seconds to create, and one took almost a full minute. In a minute, even a slow Internet connection can upload several megabytes.

Helping xdelta3

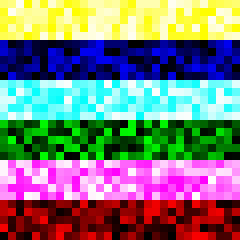

That leaves xdelta3 as our best candidate. Although it's reliably quick, we notice that many of its patches aren't that small. To understand why, it's necessary to break down what's actually happening. A JAR file gloms a bunch of Java class files together and then compresses them using the Zip format. Depending on the order of the files, the final JAR file may be wildly different. To illustrate that, consider this visualization of two uncompressed archives that each contain the same six files, but in different orders:

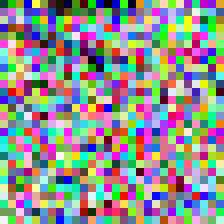

It's easy to see that they're very similar. You could tell someone how to construct the second image from the first: order the bands as yellow, blue, cyan, green, pink, red. But when we then compress the files, the picture has changed:

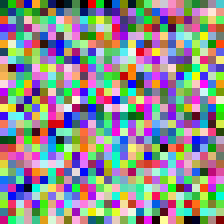

In fact, the compressed files are completely different! This is because the output of a general purpose compression algorithm like the Zip algorithm is heavily dependent on the order of its inputs [3]. Luckily, this suggests a way to improve our patch sizes: patch an uncompressed archive of the contents of the JAR file, for example, in a tar archive. We can then optionally compress the patch with a general purpose algorithm. By adopting this approach, almost 70% of patches weigh in at less than a third of the original JAR's size:

There is a drawback with this approach: while the final file will be semantically equivalent to the original file, it may not be a perfect byte-for-byte copy. In many applications, this is OK, but it's worth noting.

Conclusion

We ultimately decided to adopt a vanilla xdelta3 approach. This avoids the concerns about byte-for-byte equality. More importantly, we discovered that our practical day-to-day workloads compressed far better than this synthetic benchmark would suggest, often getting patch sizes on the order of 0.5-1% of the input file. We may one day add the mechanism suggested in this article to s3patch.

PS: Try s3patch - it's free, and it works for buckets in 16 S3 regions.

Notes

- Yeah, yeah: we could embrace the cloud and run our build and deploy pipelines in AWS CodeBuild. For quick iterations on proofs of concept, we wanted to avoid that heavy machinery if possible.

- Yeah, yeah: we could extract our third-party dependencies into a base layer using AWS Lambda Layers. The effort that entails starts to destroy the illusion of zero-effort serverless computing, though.

- By contrast, the gzip and zstd compression formats support the --rsyncable flag, which partially mitigates this concern.